Events - processing of events

The event mechanism is designed so that any module can extend or override the CzechIdM core functionality.

An event (EntityEvent) is published via EntityEventManager from various places in the application (⇒ hook). Any number of processors (AbstractEntityEventProcessor) can react to the event in a defined order (a number ⇒ the smaller it is, the sooner the processor runs). By default, processors run synchronously and in one transaction (see Transactions). Processors with the same order run in an unpredictable order (OrderComparator), so it is good practice to design a distinct order for each processor (think about it during design). Instead of using the @Order annotation, override the getOrder method (see the example). The event content can be any Serializable object, but an AbstractDto descendant is preferred (see the original source feature in the event lifecycle). The event content is required: an event without content cannot exist.
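The ordering rule can be illustrated with a minimal, self-contained sketch: processors registered for one event type are sorted ascending by their order before they run. `SketchProcessor`, `ProcessorOrdering` and the processor names are hypothetical stand-ins, not the CzechIdM API. The sketch uses a stable sort, so equal orders keep their list position here; CzechIdM gives no such guarantee, which is why distinct orders are recommended.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-in for a processor: only its name and order matter here.
record SketchProcessor(String name, int order) {}

class ProcessorOrdering {

    // Returns processor names sorted ascending by order: the smaller the order, the sooner it runs.
    static List<String> executionOrder(List<SketchProcessor> registered) {
        List<SketchProcessor> sorted = new ArrayList<>(registered);
        sorted.sort(Comparator.comparingInt(SketchProcessor::order));
        List<String> names = new ArrayList<>();
        for (SketchProcessor processor : sorted) {
            names.add(processor.name());
        }
        return names;
    }

    public static void main(String[] args) {
        List<SketchProcessor> registered = List.of(
                new SketchProcessor("log-after-save", 1),
                new SketchProcessor("validate-before-save", -1000),
                new SketchProcessor("core-save", 0));
        // prints [validate-before-save, core-save, log-after-save]
        System.out.println(executionOrder(registered));
    }
}
```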

Event lifecycle

- An event is created with the given content: <code java> EntityEvent<IdmIdentityDto> event = new IdentityEvent(IdentityEventType.UPDATE, updateIdentity); </code>

- The event is then published via EntityEventManager: <code java> EventContext<IdmIdentityDto> context = entityEventManager.process(event); </code>

- When the event is published and its content is an AbstractDto descendant, the original source is filled in the event. The original source contains the previously persisted (original) dto and can be used in "check modification" processors. If the event creates a new dto, the original source is null. The original source can be set externally; then it is not filled automatically.

- The returned context contains the results from all reacting processors, in the defined processor order.

- A processor can mark the event as closed or suspended and thereby skip all the remaining processors. If a suspended event is published again via EntityEventManager, processing continues where it was suspended, provided the context (with the processed results) is preserved. If the processing of the event is suspended, the called method should return the corresponding accepted state.

- As the event walks through the processors, its processed order is incremented. This order is used when a suspended event is run again: processing continues with the processor with the next order.
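The lifecycle above, including closing, suspending and resuming by processed order, can be sketched with a simplified in-memory model. `MiniEventManager`, `MiniContext`, `MiniProcessor`, `Outcome` and `LifecycleDemo` are hypothetical stand-ins for EntityEventManager, EventContext, EntityEventProcessor and the processor results; the real classes also carry the event content, the original source and transaction handling.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical, simplified model of event processing; not the CzechIdM API.
enum Outcome { CONTINUE, CLOSE, SUSPEND }

interface MiniProcessor {
    String name();
    int order();
    Outcome process(MiniContext context);
}

// Keeps the processed results and the order of the last processor that ran.
class MiniContext {
    final List<String> results = new ArrayList<>();
    Integer processedOrder; // null until the first processor has run
    boolean suspended;
    boolean closed;
}

class MiniEventManager {
    private final List<MiniProcessor> processors = new ArrayList<>();

    MiniEventManager(List<MiniProcessor> registered) {
        processors.addAll(registered);
        processors.sort(Comparator.comparingInt(MiniProcessor::order));
    }

    // Runs processors in order; when a preserved context is passed again after suspension,
    // processing continues with the processor with the next (greater) order.
    MiniContext process(MiniContext context) {
        if (context.closed) {
            return context; // a closed event is not processed any further
        }
        context.suspended = false;
        for (MiniProcessor p : processors) {
            if (context.processedOrder != null && p.order() <= context.processedOrder) {
                continue; // already processed before the event was suspended
            }
            Outcome outcome = p.process(context);
            context.results.add(p.name());
            context.processedOrder = p.order();
            if (outcome == Outcome.CLOSE) {
                context.closed = true;
                break;
            }
            if (outcome == Outcome.SUSPEND) {
                context.suspended = true;
                break;
            }
        }
        return context;
    }
}

class LifecycleDemo {
    static MiniProcessor of(String name, int order, Outcome outcome) {
        return new MiniProcessor() {
            public String name() { return name; }
            public int order() { return order; }
            public Outcome process(MiniContext context) { return outcome; }
        };
    }

    public static void main(String[] args) {
        MiniEventManager manager = new MiniEventManager(List.of(
                of("save", 0, Outcome.CONTINUE),
                of("approve", 10, Outcome.SUSPEND),
                of("provisioning", 1000, Outcome.CONTINUE)));
        MiniContext context = manager.process(new MiniContext());
        System.out.println(context.results + " suspended=" + context.suspended); // [save, approve] suspended=true
        manager.process(context); // published again with the preserved context
        System.out.println(context.results + " suspended=" + context.suspended); // [save, approve, provisioning] suspended=false
    }
}
```

Note that the resume check skips every processor whose order is not greater than the processed order, so a processor sharing the suspending processor's order would be skipped as well: another reason to keep orders distinct.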

Supported events

Supported events for individual entities:

- IdmIdentityDto - operation with the identity
- IdmRole - operation with the role
- IdmRoleCatalogueDto - operation with the role catalogue
- IdmIdentityRoleDto - assigning a role to the user
- SysProvisioningOperation - provisioning operation
- SysSyncConfig, SysSyncItemLog - synchronization
- IdmIdentityContractDto - labor-law relation
- IdmRoleTreeNodeDto - automatic role
- IdmTreeNode - tree structure node
- IdmPasswordPolicy - password policy

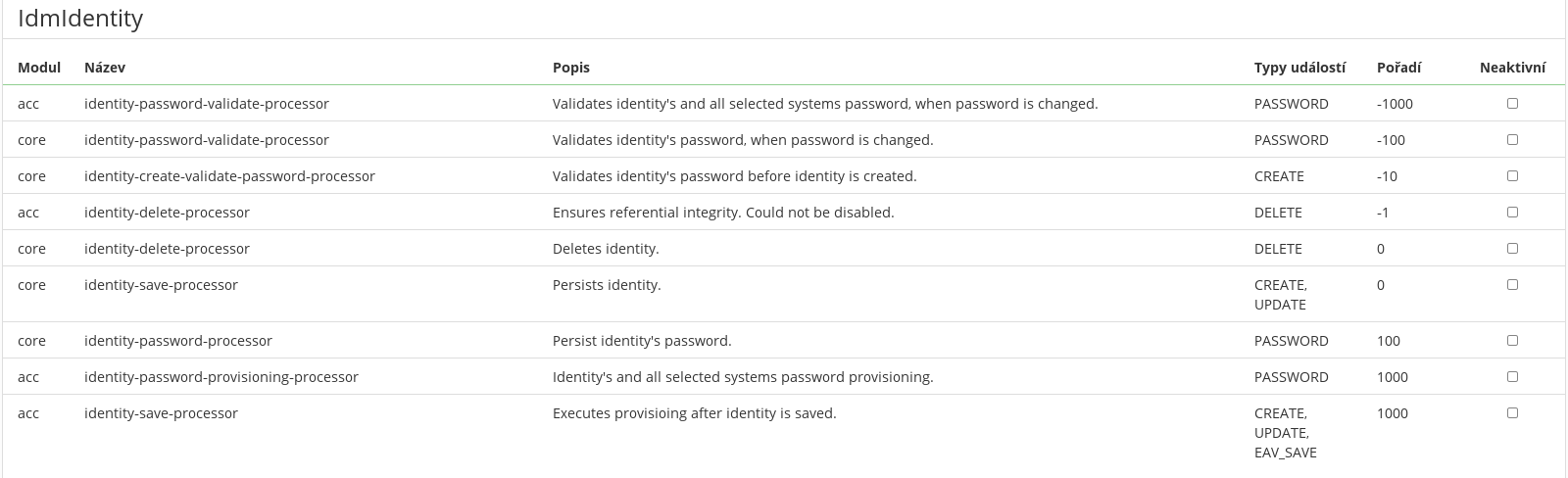

A page has been created directly in the application on the module page for an overview of all entity types and event types migrating through event processing. All the registered processors including the configuration are listed there:

The default order for processors (negative ⇒ before, positive ⇒ after the core operation):

- core: 0
- provisioning: 1000

Event types

Event types have to be compared by their string representation, NOT by instance. Concrete event types, e.g. IdentityEventType, are used for documentation purposes only, to show which domain type supports which event. Event types can be added in different modules as different types, but a processor can react across all modules (⇒ it is registered to the string representation of the event type, eventType.name()).

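<code java>
...
EntityEvent<IdmIdentityDto> event = new CoreEvent<IdmIdentityDto>(CoreEventType.CREATE, identity);
// compare event types by name; strings must be compared with equals(), not ==
if (IdentityEventType.CREATE.name().equals(event.getType().name())) {
    // do something
}
...
</code>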
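Why names and not instances: two enums declared in different modules can both define CREATE, and only their names match. The enums and the `supports` helper below are hypothetical, simplified stand-ins for CoreEventType, IdentityEventType and the processor registration.

```java
// Hypothetical stand-ins for event type enums declared in different modules.
enum SketchCoreEventType { CREATE, UPDATE, DELETE }

enum SketchIdentityEventType { CREATE, UPDATE, DELETE }

class EventTypeMatching {

    // A processor registered for a type name reacts to any event type with the same name,
    // no matter which enum (module) the event type comes from.
    static boolean supports(String registeredTypeName, Enum<?> eventType) {
        return registeredTypeName.equals(eventType.name());
    }

    public static void main(String[] args) {
        Enum<?> published = SketchCoreEventType.CREATE;
        // constants of different enums are never equal ...
        System.out.println(published.equals(SketchIdentityEventType.CREATE)); // false
        // ... but their string representations match
        System.out.println(supports(SketchIdentityEventType.CREATE.name(), published)); // true
    }
}
```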

Basic interfaces

- EntityEvent - an event passing through the processors. The content of the event can be BaseEntity, BaseDto or any serializable content.
- EventContext - holds the context of the processed event: which processors it has been processed by, with what results, whether the processing is suspended, closed, etc.
- EventResult - the result of processing the event by one processor.
- EntityEventProcessor - an event processor. A processor has to have a unique identifier within its module.
- EntityEventManager - ensures publishing of the event to processors.

Basic classes

- AbstractEntityEvent - an abstract event passing through the processors; a custom event can simply inherit from it.
- DefaultEventContext - the default context of the processed event; all abstract and default events and processors use it.
- DefaultEventResult - the default result of the event processed by one processor; all abstract and default events and processors use it.
- AbstractEntityEventProcessor - an abstract event processor; a custom processor can simply inherit from it.
- AbstractApprovableEventProcessor - the event processor sends the whole event with its dto (or serializable) content to WF for approval. The definition of the WF the event is sent to has to be configured.
- DefaultEntityEventManager - ensures publishing of the events to processors.

Transactions

The transaction is controlled before the event itself is published: the whole processing takes place in one transaction and all processors run synchronously. If any processor fails, the whole transaction is rolled back, which has some advantages:

- validation or referential integrity checks are simple to add

- the whole chain can be repeated

and disadvantages as well:

- all exceptions have to be caught properly to avoid "breaking the chain"

- logs and archives have to be saved in a new transaction (Propagation.REQUIRES_NEW)
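The trade-off can be illustrated with a plain-Java simulation, without Spring: writes made by processors are buffered and committed only if the whole chain succeeds, while log entries are written immediately, the way a Propagation.REQUIRES_NEW transaction commits independently of the outer one. `ProcessingChain` and its in-memory lists are hypothetical; in CzechIdM the rollback is performed by the Spring transaction manager.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

class ProcessingChain {
    final List<String> committed = new ArrayList<>(); // data committed by the outer transaction
    final List<String> log = new ArrayList<>();        // written at once, as if in Propagation.REQUIRES_NEW

    // Runs all processors in one simulated transaction: their writes are buffered and
    // committed only when no processor throws; otherwise the buffered writes are discarded.
    boolean run(List<Consumer<List<String>>> processors) {
        List<String> pending = new ArrayList<>(); // writes of the outer transaction
        try {
            for (Consumer<List<String>> processor : processors) {
                processor.accept(pending);
            }
        } catch (RuntimeException ex) {
            log.add("rollback: " + ex.getMessage()); // the log entry itself is not rolled back
            return false;                             // pending writes are dropped = rollback
        }
        committed.addAll(pending); // every processor succeeded = commit
        return true;
    }

    public static void main(String[] args) {
        ProcessingChain chain = new ProcessingChain();
        List<Consumer<List<String>>> processors = List.of(
                writes -> writes.add("identity saved"),
                writes -> {
                    chain.log.add("provisioning started");
                    throw new IllegalStateException("system unavailable");
                });
        System.out.println(chain.run(processors)); // false
        System.out.println(chain.committed);       // []
        System.out.println(chain.log);             // [provisioning started, rollback: system unavailable]
    }
}
```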

Predefined processors order

- 0 - basic / core functionality - operations save, delete etc.
- 100 - automatic roles computation
- 1000 - provisioning after save
- -1000 - provisioning before delete (before the identity role is deleted)
- Identity:
  - -1000 - validate password
  - 100 - persist password
- Provisioning:
  - -5000 - check disabled system
  - -1000 - compute attributes for provisioning (read attribute values from the target system)
  - -500 - check read-only system
  - 0 - execute provisioning (create / update / delete)
  - 1000 - execute after-provisioning actions (e.g. sending notifications)
  - 5000 - archive the processed provisioning operation

Other orders can be found directly in the application, see Supported events.
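To choose an order for a custom provisioning processor, it helps to check which predefined processors it will run between. A small sketch over the provisioning orders listed above; `ProvisioningOrders` and `placement` are hypothetical helpers, not part of CzechIdM.

```java
import java.util.Map;
import java.util.TreeMap;

class ProvisioningOrders {

    // Predefined provisioning processor orders, taken from the list above.
    static final TreeMap<Integer, String> PREDEFINED = new TreeMap<>(Map.of(
            -5000, "check disabled system",
            -1000, "compute attributes for provisioning",
            -500, "check readonly system",
            0, "execute provisioning",
            1000, "execute after provisioning actions",
            5000, "archive processed provisioning operation"));

    // Describes where a custom processor with the given order runs among the predefined ones.
    static String placement(int customOrder) {
        if (PREDEFINED.containsKey(customOrder)) {
            // equal orders: the relative order of the two processors is not guaranteed
            return "same order as: " + PREDEFINED.get(customOrder);
        }
        Map.Entry<Integer, String> previous = PREDEFINED.lowerEntry(customOrder);
        Map.Entry<Integer, String> next = PREDEFINED.higherEntry(customOrder);
        return "after: " + (previous == null ? "-" : previous.getValue())
                + ", before: " + (next == null ? "-" : next.getValue());
    }

    public static void main(String[] args) {
        // prints "after: compute attributes for provisioning, before: check readonly system"
        System.out.println(placement(-700));
    }
}
```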

Processor configuration

Processors can be configured through the Configurable interface using the standard application configuration.
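The property keys in the configuration examples on this page follow the pattern `idm.sec.<module>.processor.<processor-name>.<property>`. The sketch below assumes that pattern holds in general and reads a processor's `enabled` flag from `java.util.Properties`. `ProcessorConfigSketch`, `propertyKey` and `isEnabled` are hypothetical, not CzechIdM's Configurable API, and the fallback value is passed in explicitly because defaults differ per processor.

```java
import java.util.Properties;

class ProcessorConfigSketch {

    // Builds a key such as idm.sec.core.processor.<processor-name>.enabled (assumed pattern).
    static String propertyKey(String moduleId, String processorName, String property) {
        return "idm.sec." + moduleId + ".processor." + processorName + "." + property;
    }

    // Reads the enabled flag; defaultValue is used when the property is not configured.
    static boolean isEnabled(Properties config, String moduleId, String processorName, boolean defaultValue) {
        String value = config.getProperty(propertyKey(moduleId, processorName, "enabled"));
        return value == null ? defaultValue : Boolean.parseBoolean(value.trim());
    }

    public static void main(String[] args) {
        Properties config = new Properties();
        config.setProperty("idm.sec.core.processor.identity-monitored-fields-processor.enabled", "false");
        System.out.println(isEnabled(config, "core", "identity-monitored-fields-processor", true));    // false
        System.out.println(isEnabled(config, "core", "role-tree-node-create-approve-processor", true)); // true
    }
}
```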

Implemented processors

Automatic roles processors

<code properties>
##
## approve create automatic role
idm.sec.core.processor.role-tree-node-create-approve-processor.enabled=true
# wf definition
idm.sec.core.processor.role-tree-node-create-approve-processor.wf=approve-create-automatic-role
##
## approve delete automatic role
idm.sec.core.processor.role-tree-node-delete-approve-processor.enabled=true
# wf definition
idm.sec.core.processor.role-tree-node-delete-approve-processor.wf=approve-delete-automatic-role
</code>

Notification on change of monitored identity fields

- Checks whether the defined identity fields were changed. If so, a notification is sent.

- By default, the system template identityMonitoredFieldsChanged is used.

- Extended attributes are not supported yet.

- The order of the processor is Integer.MAX_VALUE - 100, because the notification should be sent at the end of the chain (after the identity is persisted and provisioning is completed).

<code properties>
# Identity changed monitored fields - checks whether the defined identity fields were changed. If so, a notification is sent.
# Disabled by default
idm.sec.core.processor.identity-monitored-fields-processor.enabled=false
# Monitored fields (for Identity; extended attributes are not supported)
idm.sec.core.processor.identity-monitored-fields-processor.monitoredFields=firstName, lastName
# The notification will be sent to all identities with this role
idm.sec.core.processor.identity-monitored-fields-processor.recipientsRole=superAdminRole
</code>
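The change check can be pictured as comparing the original source (the previously persisted dto) with the event content, field by field, for the configured monitoredFields only. A sketch assuming both versions are available as field-name-to-value maps; `MonitoredFieldsCheck`, `parseMonitoredFields` and `changedFields` are hypothetical names, and the real processor works with IdmIdentityDto and sends the notification itself. For a newly created identity the original source is null, so this sketch reports no change.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;

class MonitoredFieldsCheck {

    // Parses the comma-separated monitoredFields value, e.g. "firstName, lastName".
    static List<String> parseMonitoredFields(String configValue) {
        List<String> fields = new ArrayList<>();
        for (String field : configValue.split(",")) {
            if (!field.isBlank()) {
                fields.add(field.trim());
            }
        }
        return fields;
    }

    // Returns the monitored fields whose value differs between the original and the updated identity.
    static List<String> changedFields(Map<String, Object> original, Map<String, Object> updated, List<String> monitored) {
        List<String> changed = new ArrayList<>();
        if (original == null) {
            return changed; // new identity: no original source to compare with
        }
        for (String field : monitored) {
            if (!Objects.equals(original.get(field), updated.get(field))) {
                changed.add(field);
            }
        }
        return changed;
    }

    public static void main(String[] args) {
        List<String> monitored = parseMonitoredFields("firstName, lastName");
        Map<String, Object> original = Map.of("firstName", "Jan", "lastName", "Novak", "email", "a@example.com");
        Map<String, Object> updated = Map.of("firstName", "Jan", "lastName", "Novotny", "email", "b@example.com");
        // email changed too, but it is not monitored: prints [lastName]
        System.out.println(changedFields(original, updated, monitored));
    }
}
```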

Example

To hook into the processing after an identity is deleted, implement a processor for the event type IdentityEventType.DELETE with an order greater than 0:

<code java>
import org.springframework.context.annotation.Description;
import org.springframework.stereotype.Component;
// + imports of the CzechIdM API classes used below (Enabled, AbstractEntityEventProcessor, IdmIdentityDto,
//   IdentityEventType, EntityEvent, EventResult, DefaultEventResult, CoreEvent) and of ExampleModuleDescriptor

@Enabled(ExampleModuleDescriptor.MODULE_ID)
@Component("exampleLogIdentityDeleteProcessor")
@Description("Log after identity is deleted")
public class LogIdentityDeleteProcessor extends AbstractEntityEventProcessor<IdmIdentityDto> {

    /**
     * Processor's identifier - has to be unique by module
     */
    public static final String PROCESSOR_NAME = "log-identity-delete-processor";
    private static final org.slf4j.Logger LOG = org.slf4j.LoggerFactory.getLogger(LogIdentityDeleteProcessor.class);

    public LogIdentityDeleteProcessor() {
        // processing identity DELETE event only
        super(IdentityEventType.DELETE);
    }

    @Override
    public String getName() {
        // processor's identifier - has to be unique by module
        return PROCESSOR_NAME;
    }

    @Override
    public EventResult<IdmIdentityDto> process(EntityEvent<IdmIdentityDto> event) {
        // event content - identity
        IdmIdentityDto deletedIdentity = event.getContent();
        // log
        LOG.info("Identity [{},{}] was deleted.", deletedIdentity.getUsername(), deletedIdentity.getId());
        // result
        return new DefaultEventResult<>(event, this);
    }

    @Override
    public int getOrder() {
        // right after identity delete
        return CoreEvent.DEFAULT_ORDER + 1;
    }
}
</code>